DataGrabber and DataSink explained¶

In this chapter we will try to explain the concepts behind DataGrabber and DataSink.

Why do we need these interfaces?¶

A typical workflow takes data as input and produces data as the result of one or more operations. One can set the data required by a workflow directly as illustrated below.

from fsl_tutorial2 import preproc

preproc.base_dir = os.path.abspath('.')

preproc.inputs.inputspec.func = os.path.abspath('data/s1/f3.nii')

preproc.inputs.inputspec.struct = os.path.abspath('data/s1/struct.nii')

preproc.run()

Typical neuroimaging studies require running workflows on multiple subjects or different parameterizations of algorithms. One simple approach to that would be to simply iterate over subjects.

from fsl_tutorial2 import preproc

for name in subjects:

preproc.base_dir = os.path.abspath('.')

preproc.inputs.inputspec.func = os.path.abspath('data/%s/f3.nii'%name)

preproc.inputs.inputspec.struct = os.path.abspath('data/%s/struct.nii'%name)

preproc.run()

However, in the context of complex workflows and given that users typically arrange their imaging and other data in a semantically hierarchical data store, an alternative mechanism for reading and writing the data generated by a workflow is often necessary. As the names suggest DataGrabber is used to get at data stored in a shared file system while DataSink is used to store the data generated by a workflow into a hierarchical structure on disk.

DataGrabber¶

DataGrabber is an interface for collecting files from hard drive. It is very flexible and supports almost any file organization of your data you can imagine.

You can use it as a trivial use case of getting a fixed file. By default, DataGrabber stores its outputs in a field called outfiles.

import nipype.interfaces.io as nio

datasource1 = nio.DataGrabber()

datasource1.inputs.base_directory = os.getcwd()

datasource1.inputs.template = 'data/s1/f3.nii'

datasource1.inputs.sort_filelist = True

results = datasource1.run()

Or you can get at all uncompressed NIfTI files starting with the letter ‘f’ in all directories starting with the letter ‘s’.

datasource2.inputs.base_directory = '/mass'

datasource2.inputs.template = 'data/s*/f*.nii'

datasource1.inputs.sort_filelist = True

Two special inputs were used in these previous cases. The input base_directory indicates in which directory to search, while the input template indicates the string template to match. So in the previous case datagrabber is looking for path matches of the form /mass/data/s*/f*.

Note

When used with wildcards (e.g., s* and f* above) DataGrabber does not return data in sorted order. In order to force it to return data in sorted order, one needs to set the input sorted = True. However, when explicitly specifying an order as we will see below, sorted should be set to False.

More useful cases arise when the template can be filled by other inputs. In the example below, we define an input field for datagrabber called run. This is then used to set the template (see %d in the template).

datasource3 = nio.DataGrabber(infields=['run'])

datasource3.inputs.base_directory = os.getcwd()

datasource3.inputs.template = 'data/s1/f%d.nii'

datasource1.inputs.sort_filelist = True

datasource3.inputs.run = [3, 7]

This will return files basedir/data/s1/f3.nii and basedir/data/s1/f7.nii. We can take this a step further and pair subjects with runs.

datasource4 = nio.DataGrabber(infields=['subject_id', 'run'])

datasource4.inputs.template = 'data/%s/f%d.nii'

datasource1.inputs.sort_filelist = True

datasource4.inputs.run = [3, 7]

datasource4.inputs.subject_id = ['s1', 's3']

This will return files basedir/data/s1/f3.nii and basedir/data/s3/f7.nii.

A more realistic use-case¶

In a typical study one often wants to grab different files for a given subject and store them in semantically meaningful outputs. In the following example, we wish to retrieve all the functional runs and the structural image for the subject ‘s1’.

datasource = nio.DataGrabber(infields=['subject_id'], outfields=['func', 'struct'])

datasource.inputs.base_directory = 'data'

datasource.inputs.template = '*'

datasource1.inputs.sort_filelist = True

datasource.inputs.field_template = dict(func='%s/f%d.nii',

struct='%s/struct.nii')

datasource.inputs.template_args = dict(func=[['subject_id', [3,5,7,10]]],

struct=[['subject_id']])

datasource.inputs.subject_id = 's1'

Two more fields are introduced: field_template and template_args. These fields are both dictionaries whose keys correspond to the outfields keyword. The field_template reflects the search path for each output field, while the template_args reflect the inputs that satisfy the template. The inputs can either be one of the named inputs specified by the infields keyword arg or it can be raw strings or integers corresponding to the template. For the func output, the %s in the field_template is satisfied by subject_id and the %d is field in by the list of numbers.

Note

We have not set sorted to True as we want the DataGrabber to return the functional files in the order it was specified rather than in an alphabetic sorted order.

DataSink¶

A workflow working directory is like a cache. It contains not only the outputs of various processing stages, it also contains various extraneous information such as execution reports, hashfiles determining the input state of processes. All of this is embedded in a hierarchical structure that reflects the iterables that have been used in the workflow. This makes navigating the working directory a not so pleasant experience. And typically the user is interested in preserving only a small percentage of these outputs. The DataSink interface can be used to extract components from this cache and store it at a different location. For XNAT-based storage, see XNATSink .

Note

Unlike other interfaces, a DataSink‘s inputs are defined and created by using the workflow connect statement. Currently disconnecting an input from the DataSink does not remove that connection port.

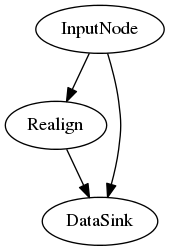

Let’s assume we have the following workflow.

The following code segment defines the DataSink node and sets the base_directory in which all outputs will be stored. The container input creates a subdirectory within the base_directory. If you are iterating a workflow over subjects, it may be useful to save it within a folder with the subject id.

datasink = pe.Node(nio.DataSink(), name='sinker')

datasink.inputs.base_directory = '/path/to/output'

workflow.connect(inputnode, 'subject_id', datasink, 'container')

If we wanted to save the realigned files and the realignment parameters to the same place the most intuitive option would be:

workflow.connect(realigner, 'realigned_files', datasink, 'motion')

workflow.connect(realigner, 'realignment_parameters', datasink, 'motion')

However, this will not work as only one connection is allowed per input port. So we need to create a second port. We can store the files in a separate folder.

workflow.connect(realigner, 'realigned_files', datasink, 'motion')

workflow.connect(realigner, 'realignment_parameters', datasink, 'motion.par')

The period (.) indicates that a subfolder called par should be created. But if we wanted to store it in the same folder as the realigned files, we would use the .@ syntax. The @ tells the DataSink interface to not create the subfolder. This will allow us to create different named input ports for DataSink and allow the user to store the files in the same folder.

workflow.connect(realigner, 'realigned_files', datasink, 'motion')

workflow.connect(realigner, 'realignment_parameters', datasink, 'motion.@par')

The syntax for the input port of DataSink takes the following form:

string[[.[@]]string[[.[@]]string] ...]

where parts between paired [] are optional.

MapNode¶

In order to use DataSink inside a MapNode, it’s inputs have to be defined inside the constructor using the infields keyword arg.

Parameterization¶

As discussed in MapNode, iterfield, and iterables explained, one can run a workflow iterating over various inputs using the iterables attribute of nodes. This means that a given workflow can have multiple outputs depending on how many iterables are there. Iterables create working directory subfolders such as _iterable_name_value. The parameterization input parameter controls whether the data stored using DataSink is in a folder structure that contains this iterable information or not. It is generally recommended to set this to True when using multiple nested iterables.

Substitutions¶

The substitutions and substitutions_regexp inputs allow users to modify the output destination path and name of a file. Substitutions are a list of 2-tuples and are carried out in the order in which they were entered. Assuming that the output path of a file is:

/root/container/_variable_1/file_subject_realigned.nii

we can use substitutions to clean up the output path.

datasink.inputs.substitutions = [('_variable', 'variable'),

('file_subject_', '')]

This will rewrite the file as:

/root/container/variable_1/realigned.nii

Note

In order to figure out which substitutions are needed it is often useful to run the workflow on a limited set of iterables and then determine the substitutions.